A study by the research firm Vanson Bourne shows that 80% of Indian organizations have experienced data loss or downtime in the last 12 months. Historically, businesses have used tape-based backup solutions and stored the tapes physically in offsite locations. However, according to Gartner, 50% of all tape backups fail to restore. These are shocking yet hard facts, and it is therefore imperative for CFOs to look for technology that offers a cost-effective, reliable and efficient Disaster Recovery solution.

The Eternal Illusion: “All is well”

A couple of months ago, a large number of computers in the Maharashtra secretariat were damaged in a fire. The government’s failure to have a proper disaster recovery (DR) plan drew widespread mockery and criticism. Ironically, the problem is not limited to government offices in India. In Asia Pacific & Japan, 44% of companies still rely on tape backups. Yet a study by Boston Computing Network (“Data Loss Statistics”) shows that 77% of the companies that do test their tape backups found backup failures.

But are companies planning wisely? The answer is a shocking “NO”. Research shows that 52% of the companies review backup and recovery plans only after a disaster strikes.

Looking Back: A Lesson from the Japan Crisis

The crisis that followed the massive earthquake in Japan last year highlighted the need for companies to strengthen their IT disaster readiness. Many major roads were damaged and had to be closed, which restricted transportation. For companies using tapes, closures like these disrupt both tape transportation and tape-based recovery. Another significant challenge during the Japan crisis was the power shortage. When the disaster occurred, the existing power supply was not enough to meet demand, so rationing and control were needed. For example, although the earthquake was in Fukushima, Tokyo was affected by the imposition of a power shutdown even though it is some distance from the site of devastation. As companies weren’t prepared for this outage, they had to contend with intermittent power supply to both their primary and disaster recovery sites.

A robust disaster-readiness plan should therefore be able to consolidate and automate data protection and recovery processes across the entire organization. It is extremely important for businesses to minimize the impact on their data during disasters, which might otherwise disrupt business continuity.

Regulatory Environment in India

India has a fast-changing regulatory environment, in which compliance requirements related to data protection and security are becoming tighter. Corporate acts and taxation laws typically determine data retention schedules based on the type of record. Financial records must be retained for at least eight years, while tax records must be kept for seven years.

The Reserve Bank of India (RBI) has released business continuity planning (BCP) guidelines for the banking sector. The RBI specifies technology aspects of BCP including high availability and fault tolerance for mission critical applications and services, RTO and RPO metrics, testing, auditing and reporting guidelines. The RBI also suggests near-site disaster recovery architecture in order to enable quick recovery and continuity of critical business operations.

India adopted new data protection measures last year, called the Information Technology (Reasonable Security Practices and Procedures and Sensitive Personal Data or Information) Rules 2011. These are designed to protect ‘sensitive personal data and information’ across all industries. The new rules require that organizations inform individuals by email, fax or letter whenever their personal information is collected. Furthermore, they demand that organizations take steps to secure personal data and offer a dispute resolution process for issues that arise around the collection and use of personal information. The law applies to all companies in India that obtain information from anywhere, which may also have implications for foreign companies that outsource services to India.

The Changing Technology Landscape

Tapes are archaic, unreliable and inflexible. In this traditional architecture, both backup and restore are too slow. On top of that, tapes require manual intervention in the case of disaster recovery. In 2005, a prominent US-based financial institution reported the loss of backup tapes in transit; the tapes held financial information on more than 1.2 million customers, including 60 U.S. senators.

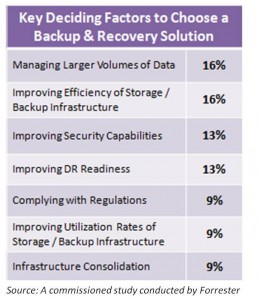

Data storage approaches currently vary widely. According to a Forrester study of 550 companies in Asia Pacific, disks are viewed most favorably in mature IT markets like Australia, Japan, Korea, New Zealand and Singapore, while internally managed off-site locations are preferred in growth markets like China, India, Indonesia, Malaysia and the Philippines.

Of late, many companies have adopted Data Deduplication because it lets them restore critical data or applications in a matter of minutes, rather than the hours or days that traditional tape-based recovery takes.

What is Data Deduplication?

As defined by techtarget.com, Data Deduplication (often called “intelligent compression” or “single-instance storage”) is a method in which only one unique instance of the data is actually retained on the storage media, while the redundant data is replaced with a pointer to the unique data copy. For example, a typical email system might contain 100 instances of the same one megabyte (MB) file attachment. If the email platform is backed up or archived, all 100 instances will be saved, requiring 100 MB of storage space. With Data Deduplication, only one instance of the attachment is actually stored; each subsequent instance is just referenced back to the one saved copy. In this example, a 100 MB storage demand could be reduced to just 1 MB. Data Deduplication also reduces the data that must be sent across a WAN for remote backups, replication, and disaster recovery.
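The email example above can be sketched in a few lines of Python. This is only a toy illustration, not how any particular backup product works: the `DedupStore` class, its method names and the mailbox paths are hypothetical, and using a SHA-256 hash of the whole attachment to detect duplicates is an assumption made for simplicity (commercial products often deduplicate at the block or chunk level instead).

```python
import hashlib


class DedupStore:
    """Toy single-instance store: one copy per unique payload, pointers for the rest."""

    def __init__(self):
        self.blocks = {}   # content hash -> bytes (the single retained copy)
        self.catalog = {}  # item name -> content hash (the "pointer")

    def put(self, name, data):
        digest = hashlib.sha256(data).hexdigest()
        # Keep the payload only the first time this content is seen.
        self.blocks.setdefault(digest, data)
        self.catalog[name] = digest

    def get(self, name):
        # Follow the pointer back to the single stored copy.
        return self.blocks[self.catalog[name]]

    def logical_bytes(self):
        # Space needed if every item were stored in full.
        return sum(len(self.blocks[d]) for d in self.catalog.values())

    def stored_bytes(self):
        # Space actually used by unique payloads.
        return sum(len(b) for b in self.blocks.values())


MB = 1024 * 1024
attachment = b"x" * MB  # stand-in for a 1 MB file attachment

store = DedupStore()
for i in range(100):
    store.put(f"mailbox-{i}/attachment", attachment)

print(store.logical_bytes() // MB, "MB before deduplication")
print(store.stored_bytes() // MB, "MB after deduplication")
```

Because all 100 mailbox entries point at the same stored payload, the sketch reports 100 MB of logical data but only 1 MB actually held, matching the figures in the example. The pointers themselves take a little space too, which the sketch ignores.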

Benefits of Deduplication: Truly overwhelming!

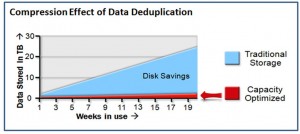

Data deduplication is a ground-breaking technology that changes the economics of disk-based backup and recovery in this era of data proliferation. It has helped companies reduce the data stored by up to 30x, cut backup windows from 11 hours to 3 hours, and cut the average restore time from 17 hours to 2 hours, improving both the Recovery Point Objective (RPO) and the Recovery Time Objective (RTO).
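To put these figures on a common scale, the short sketch below converts them into percentage reductions. It uses only the numbers quoted above; the helper name `percent_reduction` is hypothetical, and the 30x figure is treated as an upper bound (30 units of data stored as 1).

```python
def percent_reduction(before, after):
    """Return how much smaller `after` is than `before`, as a percentage."""
    return 100.0 * (before - after) / before


backup_window_cut = percent_reduction(11, 3)  # backup window, hours
restore_time_cut = percent_reduction(17, 2)   # average restore time, hours
storage_cut = percent_reduction(30, 1)        # "up to 30x" less data stored

print(f"Backup window: {backup_window_cut:.1f}% shorter")
print(f"Restore time:  {restore_time_cut:.1f}% shorter")
print(f"Data stored:   up to {storage_cut:.1f}% less")
```

Under these figures the backup window shrinks by roughly 73%, the average restore time by roughly 88%, and the stored data by up to roughly 97%.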

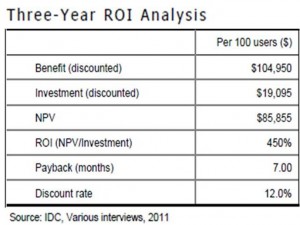

According to IDC, customers that use Data Deduplication will significantly reduce the overall data footprint under management, which will enable them to have more effective and greener datacenter operations, resulting in reduced manpower, space and energy requirements. Deduplication decreases the overall cost per GB for disk, which is driving disk costs down to become equal to or less than tape costs.

The average payback period for this technology is 7 months. The economic advantages of Deduplication are truly overwhelming if one also considers the improved recovery over LAN/WAN connections without requiring offsite tape retrieval.

The Road Ahead

Trends such as cloud computing, social technologies and mobility are driving major changes in the amount of data being generated as well as the types of data being stored (both structured and unstructured). At the same time, there has also been an increase in government and industry-specific regulations surrounding the privacy, accessibility, and retention of information. These are creating new challenges for backup and recovery processes. Hence, storing, accessing and leveraging business-critical data is becoming a strategic imperative for organizations of all sizes.

The Role of CFOs

CFOs must partner with their CIOs to implement technology that ensures data can be retrieved when required. Lack of adequate technology infrastructure and governance can sometimes cause irreparable damage to a company’s reputation, apart from attracting financial penalties and legal action. Companies must also analyze their storage, backup and archiving approaches every 12-18 months. This is essential not only for ensuring compliance, but also for containing the cost of managing growing data volumes and meeting the service level expectations of the business. As the corporate world moves from terabytes to petabytes, it’s time to move beyond generation and think about a smart disk-based backup solution.

This article was published in Information Week earlier this month.